An excellent WAN optimization solution lets you prioritize traffic and guarantee a certain amount of available bandwidth for mission-critical applications. Complete WAN optimization solutions allow a business to do much more than simply queue the bad traffic. They can block unwanted (inbound and outbound) traffic, allow it only at certain times of day, give precedence to certain hosts, and enforce many other related policies. They can optimize the actual traffic as well, providing lower latency and higher throughput for the most important applications.
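The kinds of policy described above (block some traffic, allow other traffic only at certain hours, give precedence to certain hosts) can be expressed as an ordered rule list where the first match wins. The sketch below is a minimal illustration under that assumption; `Rule`, `classify`, `POLICY`, the port numbers, and the subnet are all hypothetical and are not any vendor's configuration schema.

```python
from dataclasses import dataclass
from datetime import time
from ipaddress import ip_address, ip_network
from typing import List, Optional, Tuple


@dataclass
class Rule:
    """A hypothetical policy rule; field names are illustrative, not a vendor schema."""
    action: str                                # "block", "allow", or "prioritize"
    dst_port: Optional[int] = None             # match only this destination port
    src_net: Optional[str] = None              # match only hosts in this CIDR block
    hours: Optional[Tuple[time, time]] = None  # match only in [start, end); no wrap past midnight
    priority: int = 0                          # higher means served first

    def matches(self, src: str, dst_port: int, now: time) -> bool:
        if self.dst_port is not None and dst_port != self.dst_port:
            return False
        if self.src_net is not None and ip_address(src) not in ip_network(self.src_net):
            return False
        if self.hours is not None and not (self.hours[0] <= now < self.hours[1]):
            return False
        return True


def classify(rules: List[Rule], src: str, dst_port: int, now: time) -> Tuple[str, int]:
    """First matching rule wins; unmatched traffic is allowed at default priority."""
    for rule in rules:
        if rule.matches(src, dst_port, now):
            return rule.action, rule.priority
    return "allow", 0


# Hypothetical policy: example ports and subnet chosen only for illustration.
POLICY = [
    Rule("block", dst_port=6881),                            # unwanted traffic, always blocked
    Rule("allow", dst_port=873, hours=(time(0), time(6))),   # bulk sync allowed overnight only...
    Rule("block", dst_port=873),                             # ...and blocked the rest of the day
    Rule("prioritize", src_net="10.0.5.0/24", priority=10),  # precedence for key hosts
]

print(classify(POLICY, "10.0.9.1", 873, time(2)))
print(classify(POLICY, "10.0.5.20", 1433, time(12)))
```

Ordering carries the logic here: placing the time-limited "allow" rule ahead of the general "block" rule for the same port is what turns two simple rules into "allowed only overnight".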

WAN optimization, also called WAN acceleration, is the category of technologies and techniques used to maximize the efficiency of data flow across a wide area network (WAN). In an enterprise WAN, the goal of optimization is to increase the speed of access to critical applications and information.

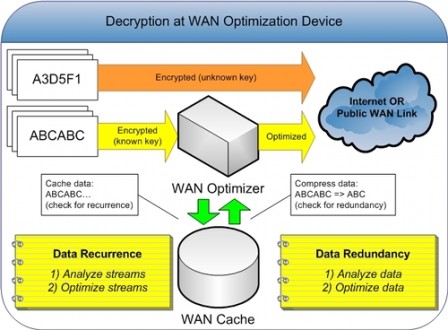

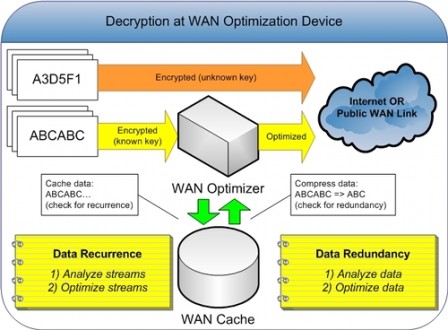

Fig 1.1: WAN optimization (NB)

WAN performance issues

Let’s start by surveying two major causes of WAN performance problems:

Latency: This is the back-and-forth time caused by chatty applications and protocols, and it gets worse with distance across the WAN. One server sends packets and asks whether the other server received them, the other server answers, and back and forth they go. This kind of repeated exchange can happen 2,000 to 3,000 times just to send a single 60 MB Microsoft PowerPoint file. Even a fairly simple transaction can add anywhere from 20 ms to 1,200 ms of latency per file transaction.
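A rough way to see why chattiness dominates is to split transfer time into the time spent pushing bits onto the link and the time spent waiting on round trips. The sketch below assumes the two simply add (no overlap), uses 2,500 round trips as a midpoint of the range above, and assumes a hypothetical 50 ms round-trip time; `transfer_time_s` is an illustrative helper, not a measurement tool.

```python
def transfer_time_s(size_bytes: float, bandwidth_bps: float,
                    round_trips: int, rtt_s: float) -> float:
    """Rough model: time on the wire plus time spent waiting on round trips."""
    serialization_s = size_bytes * 8 / bandwidth_bps  # pushing the bits onto the link
    waiting_s = round_trips * rtt_s                   # each back-and-forth costs one RTT
    return serialization_s + waiting_s


# 60 MB file, 2,500 round trips, hypothetical 50 ms RTT, on two link speeds
for link_bps in (100e6, 1e9):
    print(f"{link_bps / 1e6:.0f} Mbps: {transfer_time_s(60e6, link_bps, 2500, 0.05):.1f} s")
```

Under these assumptions, upgrading from 100 Mbps to 1 Gbps saves only a few seconds, because the 125 seconds of round-trip waiting do not shrink with more bandwidth.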

TCP window size: Adding more bandwidth won’t necessarily improve WAN performance. The TCP window size limits how much unacknowledged data a connection can have in flight, so each connection can move at most one window of data per round trip. More bandwidth gives you a bigger overall pipe for handling more transactions at once, but each individual transaction is still squeezed through a smaller pipe, and that often slows application performance over the WAN.
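The per-connection limit can be worked out directly: throughput is at most one window per round trip, and the window needed to fill a link is the bandwidth-delay product. The sketch below uses a 65,535-byte window (the largest possible without the TCP window scaling option) and a hypothetical 100 ms round-trip time; the function names are illustrative.

```python
def max_throughput_bps(window_bytes: int, rtt_s: float) -> float:
    """Upper bound for one TCP connection: one window of data per round trip."""
    return window_bytes * 8 / rtt_s


def window_needed_bytes(link_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product: the window required to keep the whole link busy."""
    return link_bps * rtt_s / 8


# 65,535-byte window, hypothetical 100 ms RTT
print(max_throughput_bps(65535, 0.1) / 1e6)  # roughly 5.2 Mbps, whatever the link speed
print(window_needed_bytes(100e6, 0.1))       # bytes of window needed to fill a 100 Mbps link
```

With these numbers a single connection tops out near 5.2 Mbps even on a 100 Mbps link, which would need a window of about 1.25 MB to stay full.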

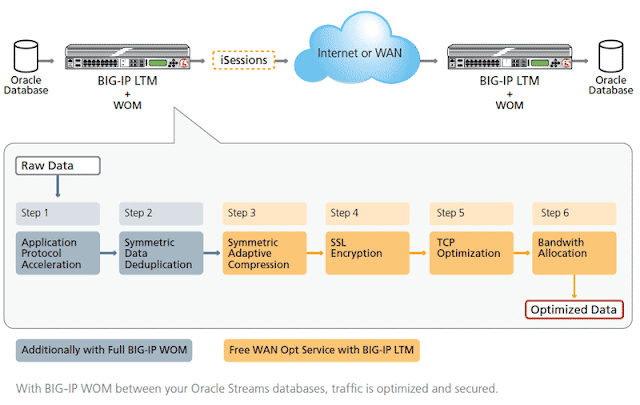

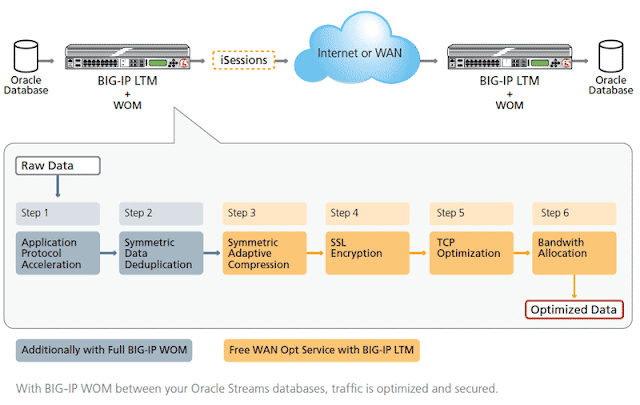

Fig 1.2: WAN optimization (NB)

Data streamlining

Don’t resend redundant data: A process known as data de-duplication removes redundant bytes from the WAN. Data that users access repeatedly over the WAN is not sent over and over. Instead, small 16-byte references are sent to tell the SteelHead appliance at the other end that this data has already been sent and can be reassembled locally. Once sent, this data never needs to be sent again.
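The reference idea can be sketched with a content hash standing in for the 16-byte reference. This is a generic de-duplication sketch, not Riverbed’s implementation: it assumes fixed 64-byte chunks (a hypothetical size), uses MD5 only because its digest happens to be 16 bytes, and ignores hash collisions. `DedupEncoder`, `DedupDecoder`, and `wire_bytes` are illustrative names.

```python
import hashlib

CHUNK_SIZE = 64  # hypothetical chunk size; real systems use larger, variable segments


def chunks(data: bytes, size: int = CHUNK_SIZE):
    for i in range(0, len(data), size):
        yield data[i:i + size]


class DedupEncoder:
    """Sending side: raw bytes the first time, a 16-byte reference after that."""
    def __init__(self):
        self.seen = set()

    def encode(self, data: bytes) -> list:
        out = []
        for chunk in chunks(data):
            ref = hashlib.md5(chunk).digest()  # 16-byte stand-in reference
            if ref in self.seen:
                out.append(("ref", ref))
            else:
                self.seen.add(ref)
                out.append(("raw", chunk))
        return out


class DedupDecoder:
    """Receiving side: stores every raw chunk and expands references locally."""
    def __init__(self):
        self.store = {}

    def decode(self, stream: list) -> bytes:
        parts = []
        for kind, payload in stream:
            if kind == "raw":
                self.store[hashlib.md5(payload).digest()] = payload
                parts.append(payload)
            else:
                parts.append(self.store[payload])
        return b"".join(parts)


def wire_bytes(stream: list) -> int:
    """Bytes that actually cross the WAN (raw chunks plus references)."""
    return sum(len(payload) for _, payload in stream)


enc, dec = DedupEncoder(), DedupDecoder()
data = b"quarterly report " * 100
first, second = enc.encode(data), enc.encode(data)
print(len(data), wire_bytes(first), wire_bytes(second))
```

The second transfer of the same data consists only of references, so it costs 16 bytes per chunk instead of the chunk itself, while the decoder still rebuilds the original bytes exactly.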

Scalable data referencing looks at the data, not the file: Let’s say a user downloads a document from a file server. At both the sending and receiving locations, SteelHead sees the file, breaks the document into segments, and stores them. The user then modifies the document and emails it back to 10 colleagues at the file’s original location. In this case, the only data sent over the WAN are the small changes made to the document and the 16-byte references that tell the SteelHead device at the other end how to reassemble the document.
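For only the changed bytes to cross the WAN, segment boundaries must not all shift when someone inserts text mid-document. One generic technique for this is content-defined chunking: cut wherever a rolling hash of the last few bytes hits a target value, so boundaries follow the content rather than fixed offsets. The sketch below is that generic technique under stated assumptions (16-byte window, roughly 64-byte average chunks, a polynomial rolling hash), not SteelHead’s actual segmentation; every name and constant is hypothetical.

```python
import random

WINDOW = 16          # bytes of context that decide each boundary (hypothetical)
AVG_CHUNK = 64       # a boundary fires on ~1 in 64 positions, so chunks average ~64 bytes
BASE = 257
MOD = (1 << 61) - 1  # large prime modulus for the rolling hash


def cdc_chunks(data: bytes) -> list:
    """Cut wherever a rolling hash of the last WINDOW bytes is divisible by AVG_CHUNK."""
    chunks, start, h = [], 0, 0
    drop = pow(BASE, WINDOW - 1, MOD)  # weight of the byte leaving the window
    for i, byte in enumerate(data):
        if i >= WINDOW:
            h = (h - data[i - WINDOW] * drop) % MOD  # slide the oldest byte out
        h = (h * BASE + byte) % MOD                  # slide the new byte in
        if i + 1 >= WINDOW and h % AVG_CHUNK == 0:
            chunks.append(data[start:i + 1])
            start = i + 1
    if start < len(data):
        chunks.append(data[start:])
    return chunks


def fixed_chunks(data: bytes, size: int = AVG_CHUNK) -> list:
    return [data[i:i + size] for i in range(0, len(data), size)]


def new_chunk_count(chunker, before: bytes, after: bytes) -> int:
    """How many chunks of `after` would have to cross the WAN as raw bytes."""
    known = set(chunker(before))
    return sum(1 for c in chunker(after) if c not in known)


rng = random.Random(42)
original = bytes(rng.randrange(256) for _ in range(20_000))
edited = original[:10_000] + b"small edit" + original[10_000:]  # insert 10 bytes mid-file
print("content-defined:", new_chunk_count(cdc_chunks, original, edited))
print("fixed-size:     ", new_chunk_count(fixed_chunks, original, edited))
```

Because each boundary depends only on the 16 bytes before it, the insertion disturbs the chunks around the edit and the rest re-align; with fixed-size chunks, every chunk after the insertion point shifts and looks new.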

SteelHead cares only about the data: To SteelHead, data is data, no matter what format or application it comes from, which means far less of it needs to be sent across the WAN. As an example, imagine how many times the words “the” and “a” appear in files from various applications. SteelHead doesn’t care which application produced those bytes; they look the same and therefore need not be resent. This type of de-duplication can remove 65–95% of the bytes that would otherwise be transmitted over the WAN.

The figure below shows an example data stream through Riverbed SteelHead devices.

Fig 1.3: WAN optimization (NB)

Transport streamlining

The fastest round trip is the one you never make: Transport streamlining makes TCP more efficient, which means fewer round trips and more data per trip. For example, traditional TCP uses what’s known as a “slow start” process: it begins by sending a small amount of data per round trip and keeps increasing that amount until packets start getting lost, a sign that the path or the receiver can’t keep up. It then cuts back, in the worst case all the way to square one, and repeats the process. Transport streamlining avoids these restarts and instead looks for the optimal window size and keeps sending at that size.
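The cost of repeated restarts can be seen in a toy model that counts round trips. The sketch assumes a path that can carry a fixed number of segments per round trip; the "restart" sender doubles its window each round trip and, whenever the window exceeds capacity, resets to one segment (loosely like TCP Tahoe after a timeout, and ignoring slow-start thresholds and congestion avoidance). The "steady" sender is an idealized stand-in for transport streamlining, not a model of any product. Function names are hypothetical.

```python
import math


def rtts_slow_start_with_restarts(total_segments: int, capacity: int) -> int:
    """Toy sender: doubles its window each round trip, restarts at 1 after a loss."""
    cwnd, sent, rtts = 1, 0, 0
    while sent < total_segments:
        rtts += 1
        if cwnd > capacity:
            sent += capacity  # only what the path can carry gets through
            cwnd = 1          # loss detected: back to square one
        else:
            sent += cwnd
            cwnd *= 2         # slow start: double every round trip
    return rtts


def rtts_steady_window(total_segments: int, window: int) -> int:
    """Idealized sender that has found the right window and stays there."""
    return math.ceil(total_segments / window)


print(rtts_slow_start_with_restarts(1000, 64))  # 46
print(rtts_steady_window(1000, 64))             # 16
```

In this model, sending 1,000 segments over a path that carries 64 per round trip takes 46 round trips with repeated restarts versus 16 at a steady window.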

Combine data streamlining with transport streamlining for dramatic WAN efficiency gains: Thanks to transport streamlining, you’ll make fewer round trips (up to 98% fewer) and send more data per trip, which adds up to much higher throughput. The effect is larger still because, once repetitive data in the payload is replaced with 16-byte references, a single packet can effectively carry megabytes of data.
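The "megabytes per packet" figure is simple arithmetic once a segment size is assumed. The sketch below assumes a 1,460-byte payload (a common TCP maximum segment size on 1,500-byte Ethernet), the 16-byte references from the text, and a hypothetical 64 KiB of original data behind each reference; the real average segment size in a given deployment is not stated in the source.

```python
MSS = 1460      # common TCP payload size on a 1,500-byte Ethernet MTU
REF_BYTES = 16  # reference size quoted in the text


def logical_bytes_per_packet(avg_segment_bytes: int,
                             payload_bytes: int = MSS, ref_bytes: int = REF_BYTES) -> int:
    """Data a packet stands for if its payload is nothing but references."""
    refs = payload_bytes // ref_bytes  # 1460 // 16 = 91 references
    return refs * avg_segment_bytes


print(logical_bytes_per_packet(64 * 1024))  # 5963776 bytes, about 6 MB
```

The result scales linearly with the assumed segment size, so the exact figure matters less than the ratio: each 16-byte reference replaces a whole segment.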

Application streamlining

Finally, application streamlining is specifically tuned for a growing list of application protocols, including CIFS, HTTP, HTTPS, MAPI, NFS, and SQL. These protocol-specific modules understand the chattiness of each protocol and work to keep as much of the conversation as possible on the LAN, where round trips are fast and add negligible latency, before sending anything across the WAN.
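One way to keep a chatty conversation on the LAN is a local proxy that answers many small requests from data it fetched in a few large WAN requests. The sketch below shows generic read-ahead under that assumption; it is not how Riverbed’s CIFS or MAPI modules work internally. `RemoteFileServer`, `ReadAheadProxy`, `read_whole_file`, the 4 KiB request size, and the 1 MiB read-ahead block are all hypothetical.

```python
class RemoteFileServer:
    """Stand-in for a file server across the WAN; counts round trips."""
    def __init__(self, content: bytes):
        self.content = content
        self.wan_round_trips = 0

    def read(self, offset: int, length: int) -> bytes:
        self.wan_round_trips += 1
        return self.content[offset:offset + length]


class ReadAheadProxy:
    """LAN-side proxy: fetches large blocks over the WAN, answers small reads locally."""
    def __init__(self, server: RemoteFileServer, block_size: int = 1 << 20):
        self.server = server
        self.block_size = block_size
        self.cache = {}  # block index -> bytes

    def read(self, offset: int, length: int) -> bytes:
        out, end = bytearray(), offset + length
        while offset < end:
            idx = offset // self.block_size
            if idx not in self.cache:  # one WAN round trip per uncached block
                self.cache[idx] = self.server.read(idx * self.block_size, self.block_size)
            start = offset - idx * self.block_size
            piece = self.cache[idx][start:start + (end - offset)]
            if not piece:  # past end of file
                break
            out += piece
            offset += len(piece)
        return bytes(out)


def read_whole_file(reader, size: int, request_size: int = 4096) -> bytes:
    """A chatty client: reads the file in many small sequential requests."""
    data = bytearray()
    for offset in range(0, size, request_size):
        data += reader.read(offset, request_size)
    return bytes(data)


content = bytes(range(256)) * 16384  # 4 MiB test file
direct = RemoteFileServer(content)
read_whole_file(direct, len(content))
proxied = RemoteFileServer(content)
read_whole_file(ReadAheadProxy(proxied), len(content))
print(direct.wan_round_trips, proxied.wan_round_trips)
```

In this sketch the client still makes 1,024 small requests, but only 4 of them turn into WAN round trips; the rest are answered on the LAN.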